Immune repertoire sequencing provides an extraordinary in-depth exploration of T and B cell receptors, which are crucial actors in human immunity. This has opened a vast number of research avenues in many fields and continues to do so. However, high-throughput sequencing data analysis can be challenging and requires data handling solutions that can deal with large data sizes, alignment of untemplated receptors, and error correction among others.

Accurate clone annotation is paramount to ensure the downstream analysis is sound and the results advance your research. That is why we developed our own Rep-Seq annotation tool at ENPICOM, called IGX-Profile. This app has been designed to facilitate analysis in a flexible, simple, scalable, and user-friend way. When we came across a dataset that would allow us to verify the accuracy of our receptor annotation, we decided to give it a go and benchmark our IGX-Profile tool. The data comes from a publicly available benchmark dataset (Khan et al, Science Advances 2016, DOI: 10.1126/sciadv.1501371) that includes a predefined mix of receptors, which are perfect for validation.

The dataset

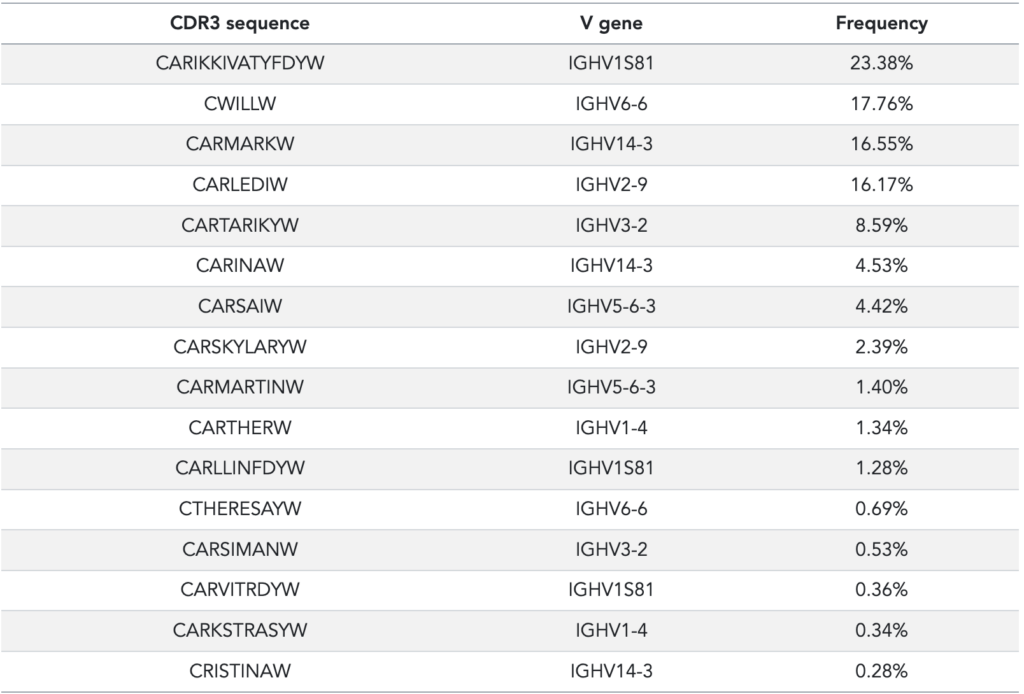

The published AIRR (adaptive immune receptor repertoire) sequencing dataset consists of a set of 16 spike-ins that were specifically designed and synthesized to test immune repertoire sequencing and processing. In the original publication, these spike-ins were used as control sequences with distinct abundances so that any quantitative differences could be traced (so-called ‘amplification bias’). The spike-ins have been designed with unique CDR3 sequences so that they can be easily identified (see Table 1).

Table 1: Data from Khan et al, supplementary material, table S6. The quantification of the spike-ins has been performed with singleplex PCR. Please refer to the original publication for details.

The amplification steps before the sequencing were performed with a home-brew multiplex approach that introduces unique molecular identifiers (UMIs) in both the Forward and Reverse orientation (FID and RID, respectively). The authors use a custom method called Molecular Amplification Fingerprinting to analyze these spike-in samples and compensate for any quantitative differences that arise during amplification. Five technical replicates of the same spike-in mix were sequenced with MiSeq 2 x 300 bp. The primary data (fastq files) are available in SRA.

Processing with IGX-Profile

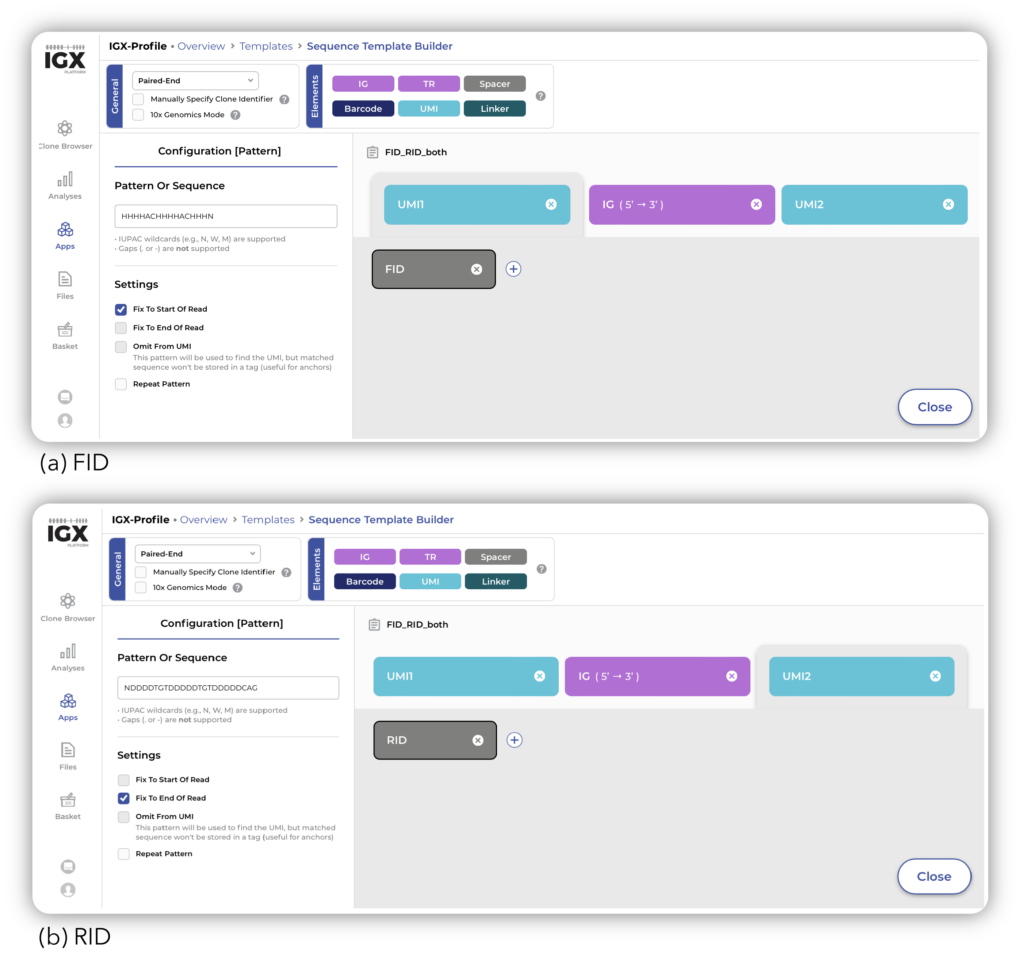

The five spike-in mix samples were obtained from SRA, uploaded onto the IGX Platform, and processed with IGX-Profile. Using the Sequence Template, we defined the amplicon structure that captured both FID (HHHHACHHHHACHHHN) and RID (NDDDDTGTDDDDDTGTDDDDDCAG) in the processing of the samples (Figure 1). Including these UMIs allowed for a better estimation of the original number of receptor sequences that were in the sample before amplification took place – providing more accurate clonal frequency results.

Figure 1: Definition of FID and RID as UMI in IGX-Profile.

In addition to the UMI constructs, another consideration is the level of quality filtering to apply. This can be done in several ways, depending on specific issues that affect a dataset. Since this dataset appears to have a consistent quality and no other eyebrow-raising features, no QC filters or UMI cutoffs have been applied – any filtering step inevitably leads to fewer receptors being recovered, so in this case less really is more.

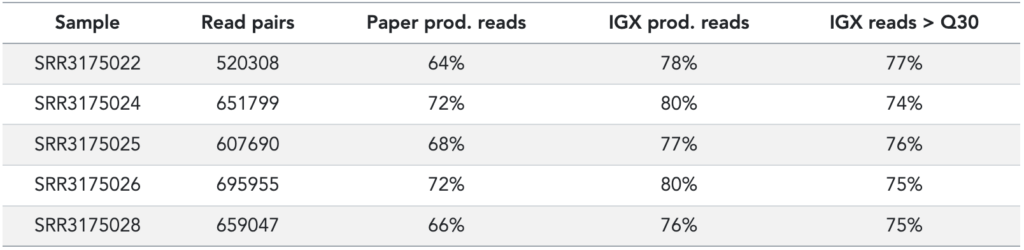

Using these FID and RID UMI constructs in the reads, with and without QC filters or UMI cutoffs, we recover slightly more immune receptors (~10%) from the raw sequencing data than the original publication did (see Table 2).

Table 2: Processing stats from original publication (based on Table S1) and IGX-Profile. Read pairs is the number of reads in the FASTQ files; IGX reads > Q30 means the percentage of reads that have an average Phred score > 30.

Identification of spike-in sequences

The original publication highlights that many of the identified sequences were in fact mutated when compared to the original spike-ins. Furthermore, germline reference databases are updated frequently, so the V-gene annotations have likely diverged from the ones the researchers referred to. Therefore, we match the sequences from our processed output to the original spike-in sequences that we have obtained from the paper. This can be done in several ways, but for our purposes we choose two methods:

- Exact match by CDR3 amino acid sequence only – this is a lenient method and annotates ~90% of all sequences as spike-in sequences.

- Exact match by annotated spike-in receptor nucleotide sequence – this is a strict method that annotates ~60% of all sequences as spike-in sequences.

To match the nucleotide sequence of the spike-in, we compare the sequences from the processed receptors to those of Table S8 of the paper’s supplementary material. The spike-in receptor sequences are all 500nt long; the receptors recovered from the processed data are all shorter (336nt – 360nt), as expected. Thus, the requirement for a match is that that the entire processed receptor sequence matches a section of the spike-in sequence from Table S8.

Regardless of using the lenient or strict methods, we are able to detect find all spike-ins in every sample – a promising initial step!

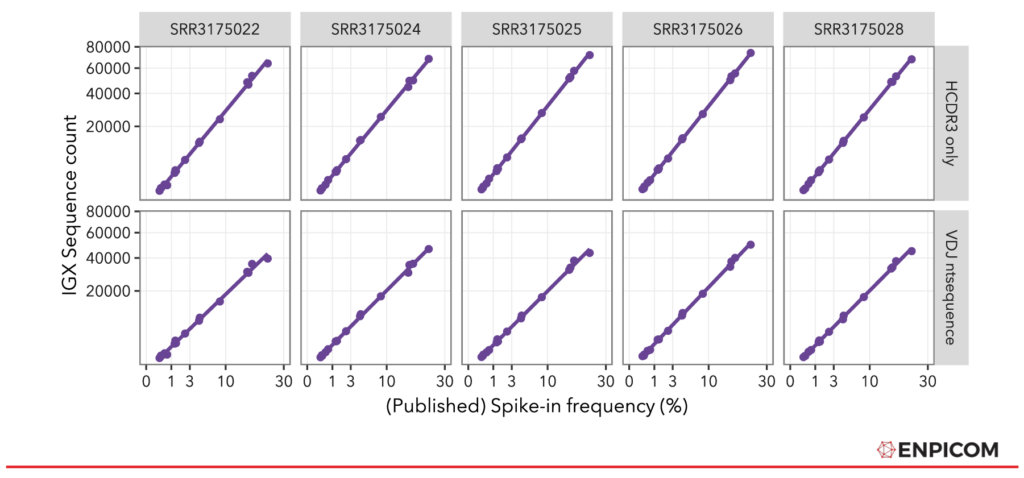

Comparing sample counts to spike-in frequencies

With the spike-ins mapped, we compared them to the original spike-in frequencies, depicted in Figure 2. The spike-in counts as quantified by IGX-Profile are mapped against the original spike-in frequencies from the paper, separately for all 5 replicates. The processed results align very well with the original frequencies, both for the lenient (CDR3 only) and the strict (whole nt sequence) spike-in annotation method. Note that the differences in counts are due to the different number of read pairs that are in the samples (see also Table 2).

Figure 2: Frequency of published spike-in frequencies compared to IGX-Profile-processed Sequence Counts.

Comparing IGX-Profile to the original paper’s MAF method

So how does IGX-Profile compare to the original MAF method? This method is introduced in the paper specifically for recovering the original spike-in frequencies. Briefly, the MAF method applies a custom mathematical model to the forward and the reverse UMI counts (FID and RID, respectively) to compensate for amplification biases that may have been introduced during library preparation and sequencing. The MAF method thus provides a more accurate estimate of sequence counts than do uncompensated counts, as the original results show in Khan et al, Figure 4. IGX-Profile uses UMI processing to achieve the same goal, and hence we can compare these two analysis tools for their ability to compensate for amplification biases in these samples.

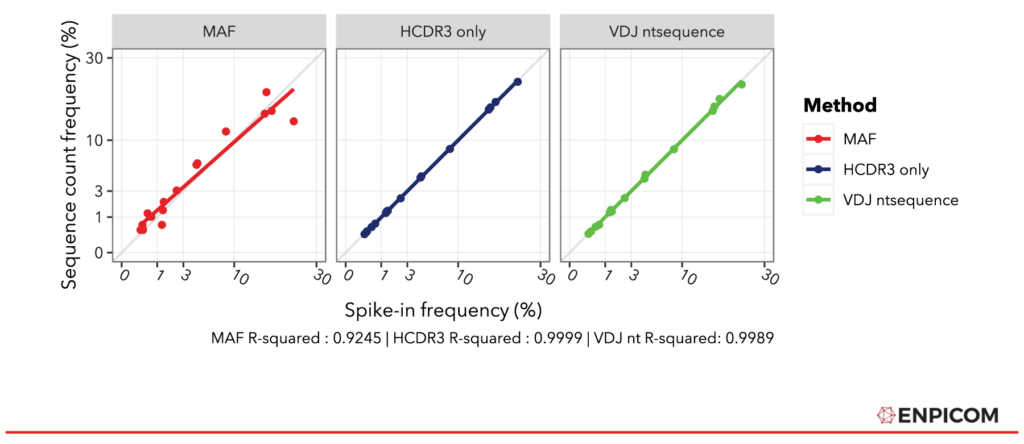

Conveniently, the average MAF mean and standard deviation (sd) values are given in the paper (Table S6). To compare the methods, all values are re-normalized into count fractions to compensate for differences in read pairs per sample, percentage productive reads (IGX-Profile is a bit more sensitive), and method count ranges. The normalized values should therefore match the original spike-in fractions and line up on the y = x diagonal – that is, if the methods fully recover the quantitative differences between the spike-in sequences. The results are depicted in Figure 3. The plots show that both IGX methods line up with the diagonal almost perfectly – 4 digits are required to see the difference between the IGX-Profile methods. The MAF approach does not achieve the same accuracy but fits still well with an R² of 0.9245. Additionally, we see that the regression lines of the IGX-Profile fits are closer to the diagonal; the slopes of the IGX fractions are spot-on (both 1), while MAF’s is 0.89, indicating that it overestimates the counts of lower-frequency spike-ins – or an underestimation of the higher-frequency spike-ins.

Figure 3: Comparison of MAF to strict and lenient IGX-Profile processing. Values for all methods normalized to fractions of total counts. Goodness of fit has been quantified in R with lm().

Coefficient of variation

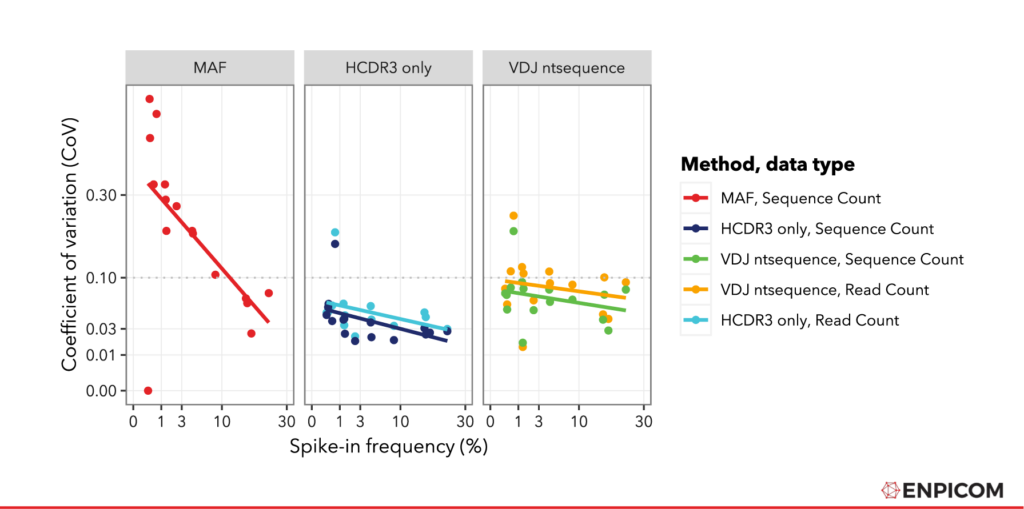

Finally, we decided to compare the precision of the normalized counts using the coefficient of variation (CoV) , which is the standard deviation divided by the mean. This is a measure for of how much signal is obtained relative to the variability in the outcome (I guess some call it ‘noise’). For CoV: lower is better, we want as little variation and as much signal as possible. The values are plotted in Figure 4.

For all methods, we see a few expected trends. For instance, the CoV is lower for high-abundance sequences; conversely, low-abundance measurements have more variability which is a common feature of NGS data . Furthermore, we see that the CoV is larger for raw read counts (plotted as Read Count) than for counts that have been corrected using FID and RID (UMI) processing (plotted as Sequence Count). This is consistent with the UMIs effect on sequence quantification accuracy.

We see that the CoV is lowest for the CDR3 matching approach. This is probably due to the higher counts obtained (more spike-in matches in the sample) that are present in this approach, which increase the mean but have no significant effect oin the variation. Furthermore, we see that the CoV values for IGX-processed data are generally lower than the MAF values. To quantify this, we can use an (arbitrary) cutoff of 0.1 (dotted grey line in ) and quantify how many points are under this line. We see that with the MAF method from the paper, five spike-ins have a CoV of lower than 0.1, and eleven do not. With IGX-Profile, even for the ‘Full nt sequence’-approach and with Read Count, we see that ten spike-ins have a CoV lower than 0.1 and six do not. For all other IGX-Profile quantification methods in all samples, the CoV is under the 0.1 threshold for all spike-ins except one. This indicates that using UMI with IGX-Profile gives a lower variance compared to other methods, even if these are based on more complex UMI configurations.

Figure 4: Coefficient of Variation plotted as a function of the spike-in frequency. Regression lines were fitted with R using lm() . For IGX-Profile processing, both read- and sequence- level data were plotted.

Outcomes

So, what have we learned after this quick spike-in analysis?

- It was easy to reprocess these benchmark samples due to IGX-Profiles flexible configuration that captured both the spike-ins and complex UMI’s they contained.

- IGX-Profile identified more spike-in sequences with better higher accuracy than the original MAF method. This is independent of how the spike-ins are annotated: since both with a lenient and with a strict matching methods, the results are better than the original.

- Analysis of the coefficient of variation shows that the use UMI for reducing amplification bias and error correction improves the precision of quantification.

All in all, this re-analysis demonstrates the rapid advancements being made in repertoire sequencing. Many of the challenges faced in the past have been largely overcome, resulting in a technology providing more accurate and reliable results compared to those achievable just a few years ago.

Interested to learn how it can be applied in your research? Talk to our experts today!

Written by:

| Dr. Henk-Jan van den HamResearch Team Lead at ENPICOMAs a Research Team Lead at ENPICOM, Henk-Jan focuses on the latest developments in immunology and bioinformatics, particularly in the analysis of adaptive immune repertoire. With a PhD in Theoretical Immunology & Bioinformatics and post-doc experience in virology, Henk-Jan is responsible for leading academic and industry projects, collaborations, and grant applications in the intersection of immunology, bioinformatics, and software engineering. |