AI is an incredibly powerful tool, but not every life sciences researcher is an AI expert.

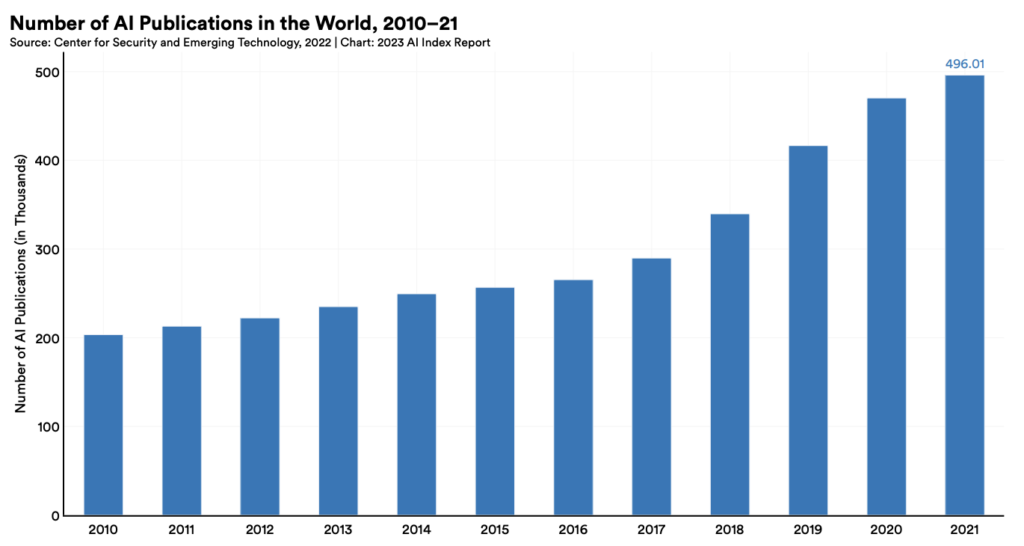

Biomedical research is at a critical juncture where legacy data from multiple sources can be harnessed by AI models to drive innovation and discovery. The industry is taking notice: two-thirds of pharmaceutical companies plan to increase their investments in IT and AI in 2024.¹ The number of AI publications worldwide also more than doubled between 2010 and 2021, reflecting the global emphasis on research into AI and its applications.²

Despite this opportunity, fully integrating AI analyses and predictions into traditional research workflows, such as drug discovery, poses many challenges. Even when specific hurdles or gaps are overcome, organizational inefficiencies can prevent research programs from realizing the full benefits of AI modeling across departments and disciplines.

To fully leverage the power of AI in biomedical research programs, now and in the future, organizations need to address several challenges.

Harmonizing data collection and integration

AI has revolutionized our ability to analyze vast quantities of data from diverse sources. Life sciences data is typically generated on many different platforms, held in both public and in-house repositories, and stored in different formats and dimensions.

To analyze data efficiently, disparate datasets must first be cleaned, sorted, and aggregated into a format that AI algorithms can use. Done by hand, this step is costly: biopharma bench scientists can spend up to 49% of their time manually retrieving and transforming data,³ which shows how much time automated data processing could save.

By standardizing data units and formats, research organizations effectively democratize their data, allowing different teams to freely access and use it for development and problem-solving insights. Importantly, harmonized data also remains ready for efficient downstream analysis as advances in AI modeling uncover new insights, relationships, or patterns within existing datasets.
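The harmonization step can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not a description of any particular platform. The two source tables, their column names, the `COLUMN_MAP` and `TO_NM` lookups, and the choice of nanomolar (nM) as the common unit for a binding constant (KD) are all hypothetical. The sketch renames each source's columns to one shared schema, normalizes the sequence text, and converts every KD value to the same unit so the records can be pooled.

```python
from dataclasses import dataclass

# Hypothetical conversion factors from each reported unit to nanomolar (nM).
TO_NM = {"nM": 1.0, "uM": 1_000.0, "µM": 1_000.0, "mM": 1_000_000.0}

# Hypothetical mapping from each source's column names to one shared schema.
COLUMN_MAP = {
    "in_house": {"seq": "sequence", "kd": "kd_value", "kd_unit": "kd_unit"},
    "public": {"Sequence": "sequence", "KD": "kd_value", "Unit": "kd_unit"},
}


@dataclass(frozen=True)
class BindingRecord:
    """One harmonized row: upper-case sequence, KD in nM, and where it came from."""
    sequence: str
    kd_nm: float
    source: str


def harmonize(rows, source):
    """Rename columns, normalize sequence text, and convert KD to nM."""
    mapping = COLUMN_MAP[source]
    records = []
    for row in rows:
        renamed = {mapping[k]: v for k, v in row.items() if k in mapping}
        seq = renamed["sequence"].strip().upper()
        kd_nm = float(renamed["kd_value"]) * TO_NM[renamed["kd_unit"]]
        records.append(BindingRecord(seq, kd_nm, source))
    return records


# Hypothetical example rows in two different layouts and units.
in_house = [{"seq": "evqlvesgg", "kd": "12.5", "kd_unit": "nM"}]
public = [{"Sequence": "QVQLQESGP", "KD": 0.8, "Unit": "uM"}]

combined = harmonize(in_house, "in_house") + harmonize(public, "public")
for rec in combined:
    print(rec)
```

Once both sources share one schema and one unit, downstream models can treat them as a single dataset. Adding a new source should then only require a new entry in the mapping tables.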

Scalable storage architecture

Many organizations overlook the impact of shared, optimized data storage on an AI system. Smaller institutions often kept separate storage systems for individual research labs and core facilities. That setup worked well before AI made it possible to sift through and analyze vast amounts of data. Now, however, simply moving datasets and models between storage sites can significantly increase the time and power that AI analysis requires and reduce its overall efficiency.

Organizational data storage systems that are optimized and designed for scalability can significantly improve the productivity of an AI system. As data generation grows over time, so do the overhead costs of managing that data, in energy consumption, operational costs, and personnel time. By optimizing data storage and access at the organizational scale, researchers can get more productive work out of each GPU hour, saving time and money.
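A back-of-the-envelope calculation shows why data movement matters. This is a rough sketch with purely hypothetical numbers: a 10 TB dataset, network links of 1, 10, and 100 Gbit/s, and an 8-GPU job that sits idle until its data arrives. It ignores protocol overhead, storage read speeds, and compression, so real transfers will be slower.

```python
def transfer_hours(dataset_tb: float, link_gbit_s: float) -> float:
    """Hours to copy a dataset over a network link (ignores protocol overhead)."""
    bits = dataset_tb * 1e12 * 8          # decimal terabytes -> bits
    seconds = bits / (link_gbit_s * 1e9)  # divide by link speed in bits per second
    return seconds / 3600


def idle_gpu_hours(dataset_tb: float, link_gbit_s: float, gpus_waiting: int) -> float:
    """GPU-hours spent idle while a job waits for its input data to arrive."""
    return transfer_hours(dataset_tb, link_gbit_s) * gpus_waiting


# Hypothetical scenario: a 10 TB dataset feeding an 8-GPU training job.
for link in (1, 10, 100):
    print(f"{link:>3} Gbit/s: {transfer_hours(10, link):6.2f} h transfer, "
          f"{idle_gpu_hours(10, link, 8):7.2f} idle GPU-hours")
```

Under these assumptions, the 1 Gbit/s copy leaves the GPUs idle for roughly 178 GPU-hours. The 100 Gbit/s case cuts that to under 2 GPU-hours. Keeping data close to compute in the first place avoids most of that cost.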

Tracking and managing AI-model lifecycle

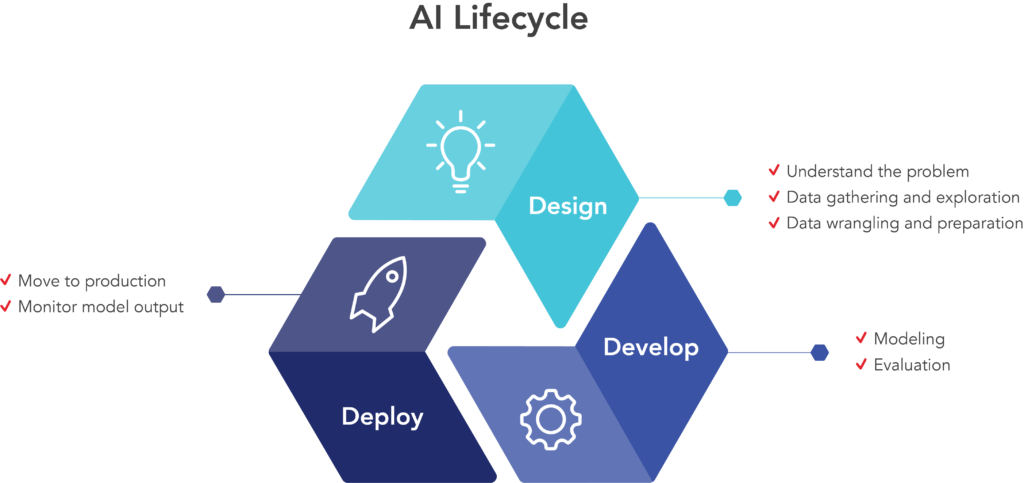

The AI lifecycle is the process of solving a problem with AI. Its steps can be divided into three stages (Figure 2). In the design stage, investigators define the problem-solving strategy. In the develop stage, they build and refine AI models. In the deploy stage, they put the effective solution into use. These steps are often formalized as machine learning operations, or MLOps: a set of practices that define how AI workflows and deployment are automated and simplified across the entire organization.

Figure 2. The three stages of the AI lifecycle: design, develop, and deploy. MLOps improves the efficiency of the AI lifecycle by optimizing ML workflows and deployment based on scalability and other organizational requirements.⁴

Traditional research and development performed without AI modeling requires close tracking and management to ensure operational efficiency, consistency, reproducibility, and traceability. R&D that leverages AI systems requires this level of scrutiny and more: each step in the AI lifecycle needs continuous oversight to ensure that the AI analysis:

- Performs as trained

- Reaches an accurate outcome

- Solves the problem

Structured AI-model lifecycles track and manage each stage of the process, prioritize model reproducibility, and highlight insights gained between experiments. Lifecycles and MLOps can also make projects more adaptable and agile when requirements and parameters inevitably change. These frameworks also keep teams up to date on project developments and changes, increasing the project’s efficiency and probability of success over the long term.

Organizational AI-lifecycle frameworks can also:

- Improve solution quality by enforcing evaluation standards

- Streamline workflows by outlining personnel responsibilities at each stage

- Mitigate risk by highlighting potential problems

- Balance resource allocation by identifying time, personnel, and computing requirements at the beginning of each stage
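A few of these ideas can be shown in a small, self-contained sketch of a lifecycle record. This is a hypothetical illustration, not the API of any real MLOps tool. The `ModelRecord` and `Stage` names, the owner roles, and the 0.9 accuracy threshold are all assumptions. The record tracks a model version through design, develop, and deploy. It notes who is responsible at each stage and blocks deployment unless a recorded evaluation metric meets a minimum standard.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    DESIGN = 1
    DEVELOP = 2
    DEPLOY = 3


@dataclass
class ModelRecord:
    """Hypothetical lifecycle record for one model version."""
    name: str
    version: str
    owner: str                      # person or team responsible at the current stage
    params: dict                    # training parameters kept for reproducibility
    stage: Stage = Stage.DESIGN
    metrics: dict = field(default_factory=dict)
    history: list = field(default_factory=list)   # (stage name, owner) per completed stage

    def advance(self, new_owner: str, min_accuracy: float = 0.9) -> None:
        """Move to the next stage, enforcing an evaluation gate before deployment."""
        if self.stage is Stage.DEPLOY:
            raise ValueError("model is already deployed")
        nxt = Stage(self.stage.value + 1)
        accuracy = self.metrics.get("accuracy", 0.0)
        if nxt is Stage.DEPLOY and accuracy < min_accuracy:
            raise ValueError(
                f"{self.name} v{self.version} fails the deployment gate "
                f"(accuracy {accuracy} < {min_accuracy})"
            )
        self.history.append((self.stage.name, self.owner))
        self.stage, self.owner = nxt, new_owner


record = ModelRecord("binding-classifier", "1.2.0", owner="design lead",
                     params={"learning_rate": 1e-4, "seed": 7})
record.advance(new_owner="ML engineer")      # DESIGN -> DEVELOP
record.metrics["accuracy"] = 0.93            # e.g. from a held-out evaluation set
record.advance(new_owner="platform team")    # DEVELOP -> DEPLOY, passes the 0.9 gate
print(record.stage.name, record.history)
```

A production MLOps setup would persist these records and attach datasets, code versions, and richer metrics. The core pattern is the same: explicit stages, named owners, and an evaluation gate.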

Integrating AI inference

AI models can make predictions or draw conclusions from new data, a process known as AI inference. Inference relies on a model that has already been trained on a dataset of example inputs, desired outputs, or both. For instance, an AI model may be trained to generate the optimal protein sequence for binding a particular epitope. Using that trained model to generate a binding sequence for an entirely new epitope is AI inference.
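The split between training and inference can be illustrated with a deliberately tiny model. This is a minimal sketch, not a protein-design model. It swaps in a nearest-centroid classifier over made-up two-number descriptors labeled "binder" or "non-binder"; the descriptors, labels, and function names are all hypothetical. Training learns one average descriptor per label from known examples. Inference then labels a candidate the model has never seen.

```python
from math import dist
from statistics import fmean


def train(examples):
    """Training: learn one centroid (mean feature vector) per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }


def infer(model, features):
    """Inference: assign a new, unseen input to the label with the nearest centroid."""
    return min(model, key=lambda label: dist(model[label], features))


# Hypothetical two-number descriptors per candidate molecule, with known labels.
training_data = [
    ((0.9, 0.8), "binder"),
    ((0.8, 0.9), "binder"),
    ((0.1, 0.2), "non-binder"),
    ((0.2, 0.1), "non-binder"),
]
model = train(training_data)
print(infer(model, (0.7, 0.75)))   # a new candidate not seen during training
```

Real generative models are vastly more complex. The workflow is the same, however: training happens once on existing data, and the trained model is then reused for inference on each new input.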

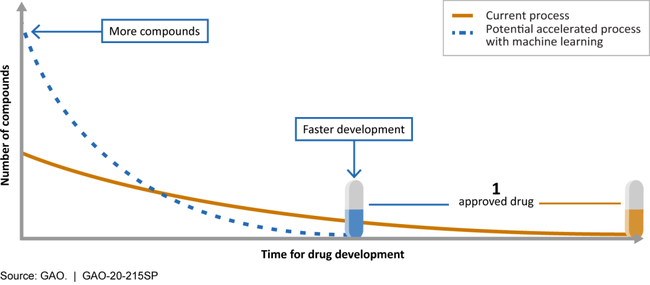

Generative AI can accelerate discovery pipelines by predicting molecular structures and interactions with growing accuracy, drawing on immense amounts of training data and increasingly sophisticated models. By integrating these AI inferences into the discovery pipeline, research programs can decrease the cost, time, and resources required to develop a new drug before clinical trials (Figure 3). Some estimates suggest that AI could reduce the development time for a new drug by 40-50%.⁵

Figure 3. AI can improve the drug development process by increasing the number of molecules considered for a particular application and shortening the time required to develop an optimized drug. Source: https://www.gao.gov/products/gao-20-215sp

Automatically integrating AI inferences into the drug discovery pipeline gives research teams the most accurate available molecular interaction predictions at the start of the process. Scientists can then invest resources in the most promising candidates from the very beginning, saving time and money.
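The prioritization step can be sketched as "score everything, test only the best." This is a hypothetical illustration. `fake_scores` stands in for a trained model's predicted binding scores, and `lab_capacity` is an assumed limit on how many candidates the wet lab can validate. The function ranks every candidate by predicted score and keeps only the top ones.

```python
import heapq
from typing import Callable, Iterable


def prioritize(candidates: Iterable[str],
               predict: Callable[[str], float],
               lab_capacity: int) -> list[tuple[float, str]]:
    """Score every candidate with a model and keep only as many as the lab can test."""
    scored = ((predict(c), c) for c in candidates)
    return heapq.nlargest(lab_capacity, scored)


# Hypothetical stand-in for a trained model's predicted binding score (higher is better).
fake_scores = {"cand-A": 0.42, "cand-B": 0.91, "cand-C": 0.13, "cand-D": 0.77}

shortlist = prioritize(fake_scores, fake_scores.get, lab_capacity=2)
print(shortlist)   # [(0.91, 'cand-B'), (0.77, 'cand-D')]
```

`heapq.nlargest` avoids sorting the full candidate list. That saving matters when a model scores millions of virtual molecules but only a handful can be synthesized and tested.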

AI model accessibility

Researchers in the life sciences are accustomed to highly technical information, but not all scientists are AI or programming experts. For personnel to take full advantage of AI analysis and inference, AI applications must provide user-friendly access to the latest AI models, embedded in the same workflows researchers already use for analysis. An easy-to-use, intuitive user interface (UI) bridges the gap between advanced computation and user expertise. It empowers researchers with little or no programming or advanced computing experience to use AI to its full potential.

Additionally, access to the most current AI models lets scientists analyze data more efficiently without having to track ML model versions or manage the complexities of running ML workflows. This accelerates decision-making and enhances biologics discovery and lead optimization.
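One simple mechanism behind "always use the latest model" is automatic version resolution. This is a minimal sketch under stated assumptions. The `REGISTRY` contents and model names are hypothetical, and versions are assumed to be plain dotted integers. The sketch also shows why a naive text comparison is not enough: it would rank "1.2.3" above "1.10.0".

```python
# Hypothetical registry: model name -> available version strings.
REGISTRY = {
    "antibody-developability": ["1.0.0", "1.10.0", "1.2.3"],
    "binding-affinity": ["0.9.1", "2.0.0"],
}


def parse_version(v: str) -> tuple[int, ...]:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically, not as text."""
    return tuple(int(part) for part in v.split("."))


def latest(model_name: str) -> str:
    """Resolve the newest registered version so users never have to pick one by hand."""
    return max(REGISTRY[model_name], key=parse_version)


print(latest("antibody-developability"))  # 1.10.0 (a plain string max would pick 1.2.3)
```

Behind a UI, a lookup like this lets a researcher simply choose "antibody developability" while the platform resolves and loads the current version.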

The solution: unified AI integration platforms

Integrating AI into research pipelines can involve many hurdles, depending on the workflows associated with each organization’s specific applications. Fortunately, unified AI integration platforms, like ENPICOM’s solution, are designed to overcome these challenges and bridge the gaps that drug development companies face, improving AI efficiency from the very start.

Integration platforms increase AI efficiency by harmonizing old and newly generated data, optimizing storage accessibility at scale, providing AI lifecycle-management software, integrating generative inferences into discovery pipelines, and improving AI user interfaces enterprise-wide. Contact us to discover how we can seamlessly integrate AI into your workflows, enhancing your research capabilities and outcomes.

References

- Buntz, B. (December 14, 2023). Two-thirds of pharma companies plan to up IT investments in 2024, survey finds. Drug Discovery & Development. Accessed July 2, 2024.

- Maslej, N., Fattorini, L., Brynjolfsson, E., Etchemendy, J., Ligett, K., Lyons, T., Manyika, J., Ngo, H., Niebles, J. C., Parli, V., Shoham, Y., Wald, R., Clark, J., & Perrault, R. (April 2023). The AI Index 2023 Annual Report. AI Index Steering Committee, Institute for Human-Centered AI, Stanford University, Stanford, CA.

- TetraScience. (2022). Insights from the 2022 State of Digital Lab Transformation Industry Survey. Accessed June 24, 2024.

- CoE. Understanding and managing the AI lifecycle. Accessed June 24, 2024.

- Gordon, C. (February 23, 2024). Using AI to modernize drug development and lessons learned. Forbes. Accessed June 24, 2024.